Navigating Data Security of Gen AI

The sudden rise and rapid adoption of generative AI has sparked excitement and apprehension. While this groundbreaking technology holds immense promise for various industries, data security and privacy concerns have loomed large. As organizations delve deeper into harnessing the power of generative AI, navigating the ethical and security implications becomes paramount. This blog guides you through the complexities that Gen AI brings to your enterprise’s security and privacy compliance, offering solutions to help resolve these challenges.

Understanding Gen AI

Generative AI refers to a category of artificial intelligence that focuses on creating new content rather than simply analyzing existing data or making predictions. Unlike traditional AI, which typically handles classification, recognition, or prediction, generative AI models are trained on large datasets to generate novel content. The potential applications seem boundless, from generating realistic images to crafting human-like text, audio, and video.

The Promise and the Peril

The promise of generative AI lies in its capacity to create vast amounts of content, from artwork to text, with incredible speed and efficiency. According to Gartner studies, by 2027, over 50% of enterprise GenAI models will be domain-specific, up from about 1% in 2023. This highlights AI’s pivotal role in fostering expression, innovation, and automation across industries.

However, alongside its potential benefits, generative AI raises important ethical and privacy concerns. These range from protecting training data and preventing models from inadvertently memorizing sensitive information, to verifying the authenticity of generated content and limiting misuse such as deepfake videos or misinformation.

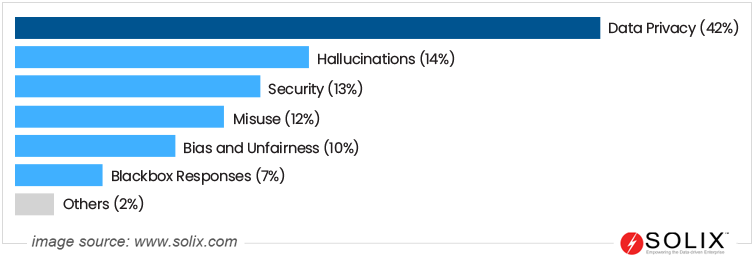

The chart above depicts Gartner’s findings on IT executives’ concerns over Gen AI. Gartner also predicts that using externally hosted LLM and GenAI models heightens risk, prompting enterprises to invest in GenAI security controls.

Best Data Security Practices for Gen AI

Generative AI presents exciting possibilities but introduces unique security challenges. Forrester’s data from 2023 indicates that 53% of AI decision-makers whose organizations have implemented policies concerning AI are refining their AI governance programs to back various Gen AI applications. Here are some best practices to ensure data security in generative AI development:

- Data Minimization: Only collect data necessary to train your generative AI model. Avoid storing unnecessary data that could be a target for breaches. Use anonymization techniques like data masking or differential privacy before feeding real-world data to the model.

- Use Synthetic Data: Combining data masking with synthetic data generation allows organizations to create artificial datasets that maintain statistical properties while safeguarding sensitive information. Gartner predicts a rise in businesses using generative AI for synthetic customer data, reaching 75% by 2026.

- Policy and Governance: Create comprehensive internal policies within your organization to handle emerging risks and security challenges associated with generative AI. Once developed, these policies on the appropriate use of generative AI technologies must be enforced continually.

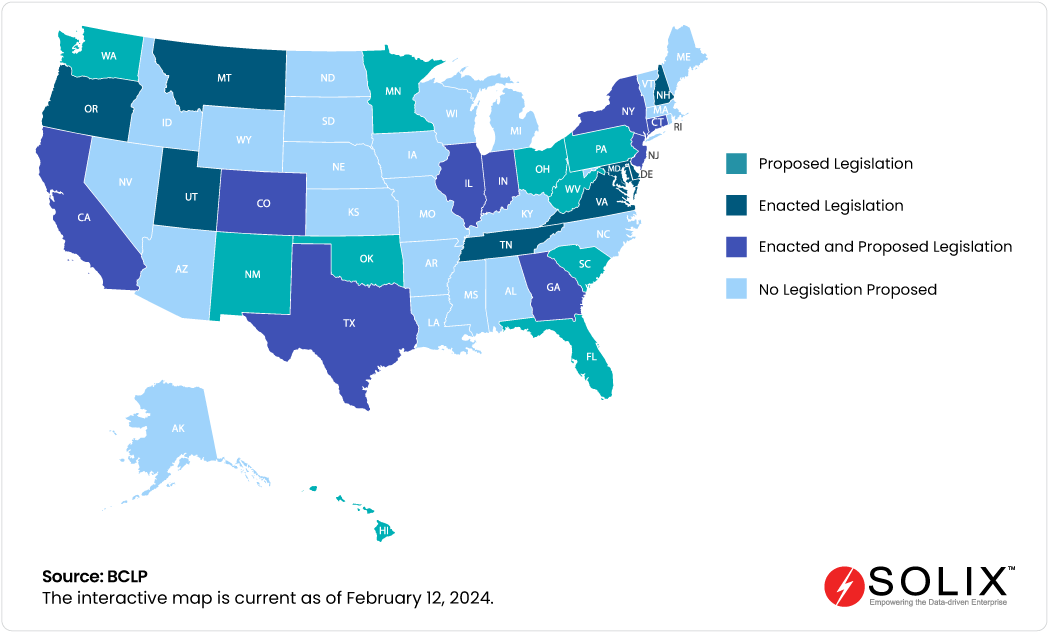

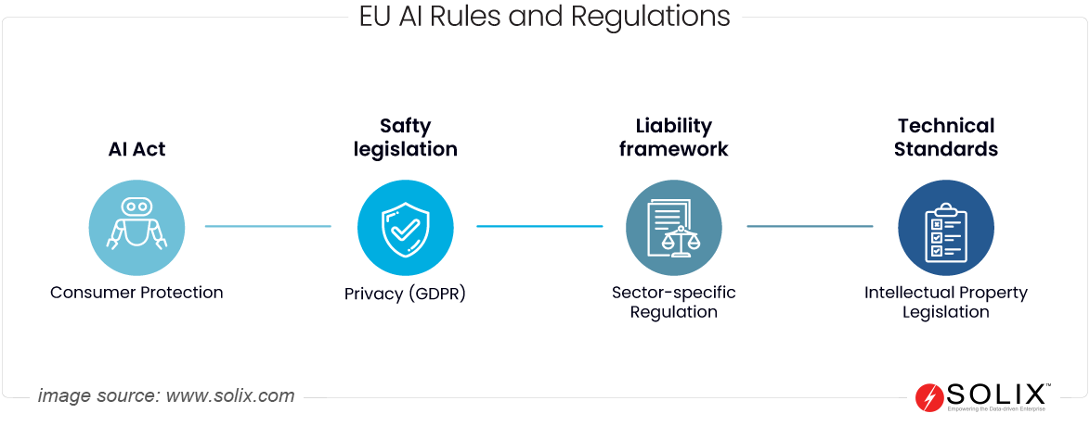

- Compliance Regulation: As AI regulations evolve, stay informed about relevant industry and privacy laws, such as the EU AI Act and GDPR, and ensure your practices comply. Forrester noted over 190 AI-related bills in 2023, showing an increasing emphasis on compliance.
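
To make the data minimization and masking practices above more concrete, here is a minimal Python sketch. It assumes a hypothetical support-ticket record; the field names (`ticket_text`, `product`, `customer_name`) and the two regular expressions are illustrative assumptions, not a complete PII detector. It keeps only the fields the model needs, then masks email addresses and phone numbers in free text before the record goes anywhere near a training set.

```python
import re

# Hypothetical allow-list: only fields the model actually needs are kept.
REQUIRED_FIELDS = {"ticket_text", "product"}

# Simplified patterns for illustration; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def minimize(record: dict) -> dict:
    """Data minimization: drop every field that is not on the allow-list."""
    return {k: v for k, v in record.items() if k in REQUIRED_FIELDS}


def mask_text(text: str) -> str:
    """Data masking: replace emails and phone numbers with fixed placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def prepare(record: dict) -> dict:
    """Minimize first, then mask any remaining string fields."""
    slim = minimize(record)
    return {k: mask_text(v) if isinstance(v, str) else v for k, v in slim.items()}


raw = {
    "customer_name": "Jane Doe",
    "ticket_text": "Reach me at jane.doe@example.com or +1 415-555-0100.",
    "product": "router",
}
print(prepare(raw))
```

Minimizing before masking means the masking step never has to handle fields that should not have been collected in the first place.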

Data Masking Use Cases in Generative AI

Data masking is a crucial strategy for safeguarding privacy and security in the landscape of generative AI. Here’s why data masking is of paramount importance in the context of generative AI:

- Preserving Privacy and Confidentiality: Data masking anonymizes generative AI models’ training datasets by replacing identifiable attributes with random tokens or pseudonyms. This helps organizations preserve individuals’ privacy while enabling the model to learn from the underlying patterns in the data.

- Statistically Sound AI Training Data: Data masking preserves the original dataset’s statistical properties and patterns while safeguarding sensitive information. This helps the resulting training data reflect a diverse sample, promoting unbiased and ethical AI outcomes.

- Safeguard Third-Party Collaboration: The rise of Gen AI raises concerns about increased data sharing, which puts non-public enterprise data and third-party software security at risk. A compromised third-party app that connects to a Gen AI API may grant attackers unauthorized access. Masking data before it is shared limits what such a breach can expose.

- Enhancing Regulatory Compliance: Using masked data for training demonstrates a commitment to data privacy and responsible AI development. For example, the EU AI Act, on which EU lawmakers reached a provisional agreement in December 2023, underscores the growing acceptance of responsible AI practices.
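
As one possible illustration of replacing identifiable attributes with pseudonyms, the sketch below uses a keyed hash (HMAC-SHA256) from Python's standard library. This is a swapped-in technique chosen for the example, not a description of any particular product: unlike purely random tokens, a keyed hash maps the same input to the same pseudonym, so a model can still learn per-customer patterns. The key value, the `user` prefix, and the sample rows are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical key; in practice, load it from a secret store and never hard-code it.
SECRET_KEY = b"replace-with-a-key-from-your-secret-store"


def pseudonymize(value: str, prefix: str = "user") -> str:
    """Map an identifier to a stable pseudonym using a keyed hash."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    # Truncated for readability; a shorter token raises the collision risk.
    return f"{prefix}_{digest[:12]}"


rows = [
    {"email": "jane.doe@example.com", "plan": "pro"},
    {"email": "sam@example.org", "plan": "free"},
    {"email": "jane.doe@example.com", "plan": "pro"},
]

# Replace the identifying column and leave the rest of each row untouched.
masked = [{**row, "email": pseudonymize(row["email"])} for row in rows]
for row in masked:
    print(row)
```

The first and third rows receive the same pseudonym, which preserves the link between them without revealing the address. Anyone holding the key can recompute pseudonyms for known inputs, so this is pseudonymization rather than full anonymization, and the key needs the same protection as the original data.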

A key challenge in data masking for generative AI is striking the right balance between preserving utility and protecting privacy. Advanced techniques, such as homomorphic encryption or synthetic data generation, can help achieve this balance by preserving the statistical properties of the original data while keeping sensitive information obscured.
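
The utility side of that balance can be illustrated with a deliberately simple sketch of synthetic data generation. It assumes a single numeric column (the `real_ages` values are made up) and fits a normal distribution to it, then samples new values. Real synthetic data tools model correlations across many columns and add formal privacy safeguards, which this example does not attempt.

```python
import random
import statistics


def synthesize(values, n, seed=0):
    """Sample n synthetic values from a normal distribution fitted to `values`."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    mu = statistics.fmean(values)
    sigma = statistics.stdev(values)
    return [rng.gauss(mu, sigma) for _ in range(n)]


# Hypothetical sensitive column, for example customer ages.
real_ages = [23, 35, 41, 29, 52, 38, 47, 31, 44, 36]
synthetic_ages = synthesize(real_ages, 5000)

# Compare summary statistics of the real and synthetic columns.
print("real mean/stdev:     ", statistics.fmean(real_ages), statistics.stdev(real_ages))
print("synthetic mean/stdev:", statistics.fmean(synthetic_ages), statistics.stdev(synthetic_ages))
```

The synthetic column tracks the mean and spread of the original closely, yet no synthetic value is a copy of a real record. A fitted distribution on its own carries no formal privacy guarantee, so it complements masking rather than replacing it.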

In conclusion, users may prioritize convenience and innovation, but organizations must uphold the principles of data security to keep sensitive information from falling into the wrong hands. Data security solutions like SOLIXCloud Data Masking can help uphold integrity, privacy, and security in the age of generative AI, so that innovation thrives in harmony with ethical considerations.

Vishnu Jayan is a tech blogger and Senior Product Marketing Executive at Solix Technologies, specializing in enterprise data governance, management, security, and compliance. He earned his MBA from ICFAI Business School Hyderabad. He creates blogs, articles, ebooks, and other marketing collateral that spotlight the latest trends in data management and privacy compliance. Vishnu has a proven track record of driving leads and traffic to Solix. He is passionate about helping businesses thrive by developing positioning and messaging strategies for GTMs, conducting market research, and fostering customer engagement. His work supports Solix’s mission to provide innovative software solutions for secure and efficient data management.