The Hidden Costs of Insufficient Data Lake Planning

Data lakes and modern data platforms promise the ability to ingest, process, and store massive volumes of unstructured, semi-structured, and structured data in a unified, centralized repository. However, when projects and teams lack clear objectives and comprehensive implementation plans, these investments can quickly turn into expensive failures.

This blog discusses how insufficient planning manifests as a poorly designed architecture that lacks scalability, integrates poorly with existing systems, and delivers little business value, ultimately leading to a failed implementation.

Understanding Data Lakes

A data lake is a modern approach to data storage that can ingest data in its native format, in a schema-agnostic manner, with little upfront processing. Unlike traditional data warehouses, which apply a schema when data is written (schema-on-write), data lakes support a schema-on-read approach: compute-heavy processing and transformations can be postponed until downstream applications need them. This flexibility allows data teams to stage data for use cases beyond traditional analytics, such as machine learning and AI.
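As a rough illustration of schema-on-read, here is a minimal Python sketch. The sample records, field names, and the `read_with_schema` helper are hypothetical; in practice a query engine or processing framework would typically apply the schema, but the principle is the same: raw data is stored untouched, and each consumer projects its own schema only when it reads.

```python
import json
from typing import Any, Callable

# Raw events land in the lake exactly as received: no schema is enforced on write.
RAW_EVENTS = [
    '{"order_id": "1001", "amount": "25.50", "region": "EU"}',
    '{"order_id": "1002", "amount": "12", "channel": "web"}',
]

# A schema chosen by one (hypothetical) downstream consumer, applied only at read time.
ORDER_SCHEMA: dict[str, Callable[[str], Any]] = {
    "order_id": int,
    "amount": float,
    "region": str,
}


def read_with_schema(raw_lines, schema):
    """Parse raw JSON lines and project/cast each record onto `schema` at read time."""
    for line in raw_lines:
        record = json.loads(line)
        # Fields missing from a record become None; fields outside the schema are ignored.
        yield {
            name: (cast(record[name]) if name in record else None)
            for name, cast in schema.items()
        }


rows = list(read_with_schema(RAW_EVENTS, ORDER_SCHEMA))
```

The second raw event keeps its extra `channel` field in storage, so a different consumer could later read it with a schema that includes that field, without anyone reloading the data.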

However, without a defined plan, this flexibility can quickly lead to chaos and a failed data lake implementation: in other words, a "data swamp."

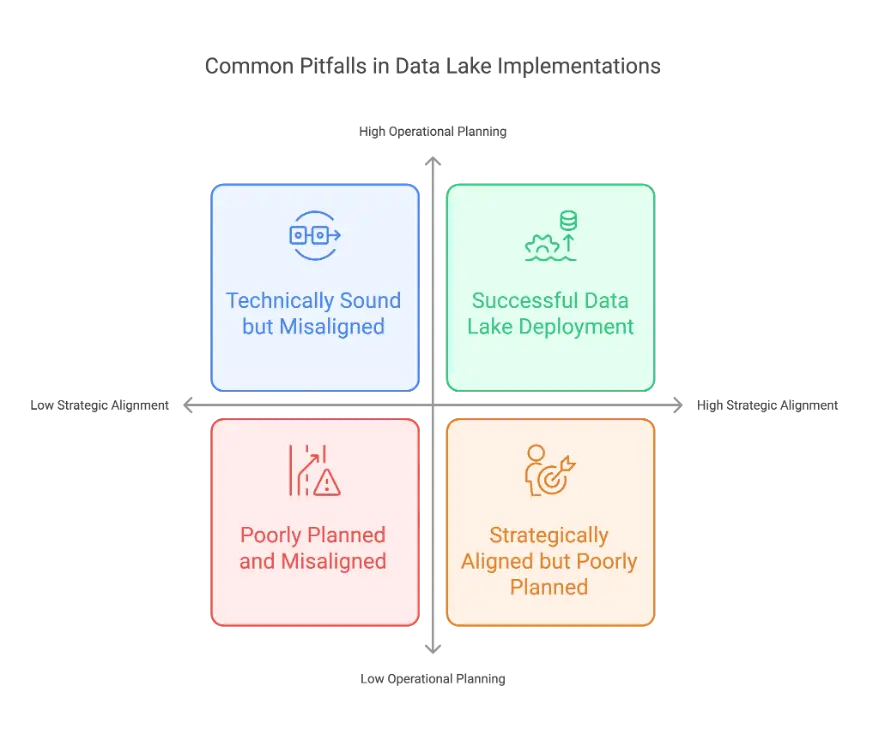

The Pitfalls of Insufficient Planning

A Poorly Planned Data Architecture

Every IT project should begin by defining clear goals and objectives. When an implementation starts without clearly defined objectives, the resulting architecture often lacks the necessary cohesion. Inadequate data lake planning often results in:

- Fragmented Storage: Without a defined structure, data may be stored haphazardly, making it difficult for users to find and retrieve relevant data and insights.

- Ineffective Metadata Management: Data catalogs play a significant role in data lake success. A well-planned data lake should include robust metadata management practices backed by a comprehensive data catalog, because metadata helps users understand their data. Without effective metadata management, the data lake risks becoming a "data swamp," where insights get buried.

- Poor Data Quality: Without proper planning, teams are often left in the dark about what data is going into the data lake. This ambiguity leads to inconsistent data formats and unreliable data entries, ultimately compromising the integrity and usability of the entire system.
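To make metadata management and data quality checks more concrete, the sketch below registers a dataset in a toy in-memory catalog and then uses the registered column types to screen incoming records before they land. `CatalogEntry`, `register`, `validate_batch`, and the sample dataset are hypothetical illustrations, not the API of any particular catalog product; the code assumes Python 3.9 or later.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Minimal metadata for one dataset (illustrative fields, not a real catalog API)."""
    name: str
    owner: str
    location: str
    columns: dict[str, type]
    description: str = ""
    tags: list[str] = field(default_factory=list)


# An in-memory dictionary stands in for a real data catalog service.
CATALOG: dict[str, CatalogEntry] = {}


def register(entry: CatalogEntry) -> None:
    """Add a dataset to the catalog, refusing duplicate names."""
    if entry.name in CATALOG:
        raise ValueError(f"dataset {entry.name!r} is already registered")
    CATALOG[entry.name] = entry


def validate_batch(dataset: str, records: list[dict]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Split an incoming batch into accepted records and (record, reason) rejects."""
    columns = CATALOG[dataset].columns
    accepted: list[dict] = []
    rejected: list[tuple[dict, str]] = []
    for rec in records:
        missing = [c for c in columns if c not in rec]
        if missing:
            rejected.append((rec, f"missing columns: {missing}"))
            continue
        wrong = [c for c, expected in columns.items() if not isinstance(rec[c], expected)]
        if wrong:
            rejected.append((rec, f"wrong types: {wrong}"))
            continue
        accepted.append(rec)
    return accepted, rejected


# Hypothetical dataset registration; owner, path, and description are placeholders.
register(CatalogEntry(
    name="sales.orders",
    owner="finance-data-team",
    location="/lake/raw/sales/orders/",
    columns={"order_id": int, "amount": float},
    description="Order headers exported nightly from the ERP system.",
))
good, bad = validate_batch("sales.orders", [
    {"order_id": 1, "amount": 9.99},
    {"order_id": "2", "amount": 5.0},
    {"amount": 1.0},
])
```

Rejected records keep a reason alongside them, so they can be routed to a quarantine area for review instead of silently degrading the data that analysts rely on.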

Lack of Scalability in Design

A design that fails to account for future growth is doomed to struggle as data volumes expand, because growing data volumes demand more storage capacity and compute. Insufficient planning in this area typically leads to:

- Resource Bottlenecks: The initial architecture may be unable to scale horizontally or vertically, resulting in slow performance and system downtime. The result is delayed or stale insights in a business environment that depends on timely data.

- High Future Costs: An initial plan that doesn't account for growth and changing business requirements often falls short of business expectations. Retrofitting a system for scalability after deployment is disruptive and can cost far more than designing it to handle growth from the outset.

Scalability, storage, and compute requirements should be addressed from the planning stage. Doing so prevents bottlenecks and ensures your data lake can evolve with your organization's growing needs.
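One common way to plan for growth is to partition data from day one so that queries read only the data they need. The sketch below uses Hive-style `key=value` directory naming, a widely used convention; the lake root, dataset name, and the `partition_path` and `prune` helpers are hypothetical.

```python
from datetime import date


def partition_path(root: str, dataset: str, event_date: date) -> str:
    """Build a Hive-style partition directory made of key=value segments."""
    return (
        f"{root}/{dataset}/year={event_date.year:04d}/"
        f"month={event_date.month:02d}/day={event_date.day:02d}"
    )


def prune(paths: list[str], start: date, end: date) -> list[str]:
    """Keep only partitions whose date falls within [start, end]."""
    kept = []
    for path in paths:
        # Parse the key=value segments back out of the directory path.
        keys = dict(seg.split("=", 1) for seg in path.split("/") if "=" in seg)
        d = date(int(keys["year"]), int(keys["month"]), int(keys["day"]))
        if start <= d <= end:
            kept.append(path)
    return kept


# One partition per day of January 2024 under a hypothetical lake root.
paths = [partition_path("/lake/raw", "orders", date(2024, 1, day)) for day in range(1, 32)]
last_week = prune(paths, date(2024, 1, 25), date(2024, 1, 31))
```

Query engines such as Spark and Hive can skip partitions using keys like these, so a query over the last week touches 7 directories instead of 31 in this example. The right partition keys depend on your query patterns; partitioning too finely creates many small files, which brings its own performance problems.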

Insufficient Consideration of Future Needs and Requirements

Data teams often become myopic with their data lake implementation plans. While it's vital to address current needs, future requirements and evolving objectives should also be accounted for. Failure to do so could result in:

- Limited Flexibility: As requirements evolve, the data lake may not support new analytics or business intelligence needs, leaving data teams and end users unable to extract actionable insights in time.

- Missed Integration Opportunities: Without anticipating future workflows or emerging technologies, your data lake might not integrate seamlessly with other systems and applications. This increases the lead time for insights, which translates into higher opportunity costs.

Strategic planning incorporating current and future business objectives is critical for building a resilient data infrastructure.

Limited Integration with Existing Workflows and Legacy Systems

Large organizations typically have numerous historical data sources and legacy systems that data teams will want to connect to the new data lake. When planning isn't done right, however, the focus falls on technical implementation, while existing workflows and dependencies on legacy systems, which must be carefully mapped to avoid disrupting operations, are overlooked. Any oversight here could lead to:

- Siloed Data: When the data lake isn't correctly mapped to existing workflows, mission-critical data is likely to remain isolated in disparate silos, undermining the goal of creating a single source of truth within your organization.

- Operational Inefficiencies: Legacy systems often have established processes that must interface with the data lake. Limited integration can disrupt these processes, reducing overall productivity.

Designing the data lake for interoperability is key to a successful implementation.
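A common low-impact way to bring legacy data into a data lake is incremental extraction with a watermark: each run pulls only the rows that changed since the previous run, instead of re-copying entire tables. The sketch below uses Python's built-in `sqlite3` module, with an in-memory database standing in for a legacy system; the `customers` table, its `updated_at` column, and `extract_changes` are hypothetical, and the approach assumes the source system reliably maintains `updated_at`.

```python
import sqlite3


def extract_changes(conn: sqlite3.Connection, last_watermark: str):
    """Pull only rows updated after `last_watermark` from the (hypothetical) legacy table."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # The newest timestamp seen becomes the starting point for the next run.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


# Demo: an in-memory database stands in for the legacy source system.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Acme", "2024-01-01T09:00:00"),
        (2, "Globex", "2024-01-02T10:30:00"),
        (3, "Initech", "2024-01-03T08:15:00"),
    ],
)
batch, watermark = extract_changes(conn, "2024-01-01T12:00:00")
```

The returned watermark would be persisted between runs. Because the filter is a strict `>`, a row written later with a timestamp equal to the stored watermark would be skipped; production pipelines often handle this with an overlap window or change data capture.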

Consequences of Inadequate Planning

The direct outcomes of insufficient planning in data lake projects are stark:

- Data Swamps: Without clear structure and governance, a data lake can devolve into a data swamp, an unmanageable repository of useless information.

- Escalating Costs: Poor planning often results in unforeseen expenses as the organization struggles to retrofit systems for scalability and integration.

- Missed Business Value: Ultimately, a data lake’s lack of clear objectives and poor design can render it ineffective, preventing the organization from deriving the strategic insights it was meant to provide.

- Misguided Decision-Making: Poor planning can allow low-quality data to flow into downstream analytics applications, producing faulty insights and leading to misguided decisions.

Best Practices to Avoid Failure

To prevent these pitfalls, organizations should adopt a comprehensive planning approach:

- Define Clear Objectives: Identify the business problems the data lake is meant to solve. Involve key stakeholders from IT, business, and analytics teams to create a unified vision.

- Design for Scalability: Build an architecture that meets current requirements and is flexible enough to scale with future data volumes and usage patterns.

- Integrate with Existing Systems: Plan for seamless integration with legacy systems and existing workflows. This ensures that data flows smoothly across the organization.

- Plan for Governance: Establish strong data governance policies and robust metadata management practices from the start. These measures will help keep the data lake organized and secure.

Implementing these best practices can significantly increase the likelihood of a successful data lake deployment, ensuring that the organization can capitalize on its data initiatives rather than fall victim to planning oversights.

Closing Thoughts

Data lakes undoubtedly have immense potential to deliver business value. However, they also carry serious risks of failure if not planned and implemented correctly. During the project planning and scoping stage, teams often fail to address core issues like future compatibility, scalability, integration, and interoperability. By prioritizing planning, scalability, integration, and design, organizations can unlock the full potential of data lakes and modern data platforms and drive real value from their data.

Point to remember: A successful data lake implementation begins long before the data starts flowing. It all starts with a clear plan.

Hello there! I am Haricharaun Jayakumar, a senior executive in product marketing at Solix Technologies. My primary focus is on data and analytics, data management architectures, enterprise artificial intelligence, and archiving. I earned my MBA from ICFAI Business School, Hyderabad. I drive market research, lead-gen projects, and product marketing initiatives for Solix Enterprise Data Lake and Enterprise AI. Apart from all things data and business, I occasionally enjoy listening to and playing music. Thanks!