ETL (Extract, Transform & Load)

What is ETL?

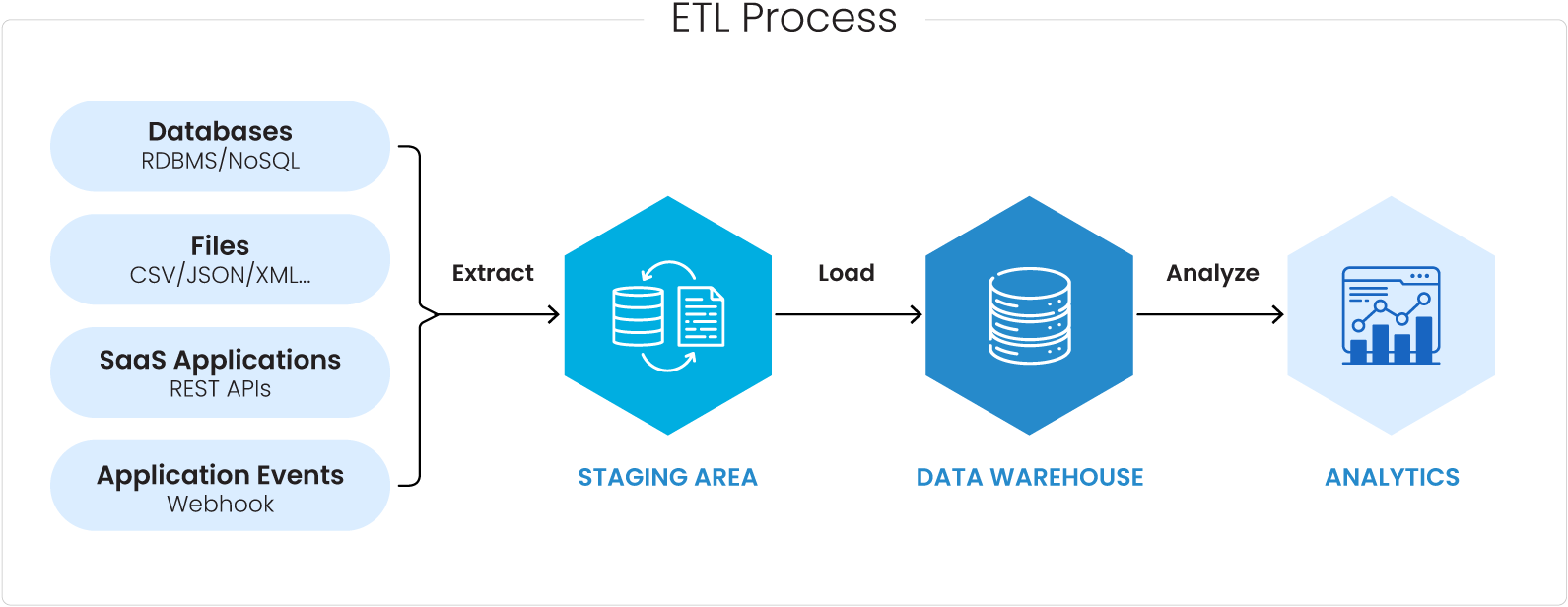

ETL (Extract, Transform, Load) is a critical Data Integration process that moves data from various sources to a target system, typically a data warehouse. In an ETL process, data is extracted from multiple sources, then cleaned, enriched with metadata, and transformed before it is loaded for use in Data Analytics, BI, and Data Science workloads.
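To make the three stages concrete, here is a minimal sketch using only the Python standard library. The CSV sample, the `sales` table, and the function names are illustrative assumptions, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical source data: a CSV export with inconsistent spacing and casing.
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,bob,7
"""

def extract(text: str) -> list[dict]:
    """Extract: parse CSV text into a list of row dicts (all values are strings)."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Transform: trim and normalize names, convert text to numeric types."""
    return [(int(r["id"]), r["name"].strip().title(), float(r["amount"])) for r in rows]

def load(rows: list[tuple], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the target table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stands in for the data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM sales ORDER BY id").fetchall())
# [(1, 'Alice', 10.5), (2, 'Bob', 7.0)]
```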

Extract

ETL processes extract data from various sources, including databases (relational and non-relational), APIs, flat files (CSV, Excel), CRM/ERP systems, web pages, and more.
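A common extraction pattern is to read each source with its own reader and map the results onto one shared record shape. The sketch below assumes a CSV flat file and a JSON payload of the kind an API might return; the field names and the `source` tag are hypothetical.

```python
import csv
import io
import json

# Hypothetical sources: a CSV flat file and a JSON payload such as an API might return.
CSV_SOURCE = "customer_id,email\n1,a@example.com\n"
JSON_SOURCE = '{"customers": [{"id": 2, "email": "b@example.com"}]}'

def extract_csv(text: str) -> list[dict]:
    # CSV values arrive as strings; map them onto the shared record shape.
    return [{"customer_id": int(r["customer_id"]), "email": r["email"], "source": "csv"}
            for r in csv.DictReader(io.StringIO(text))]

def extract_json(text: str) -> list[dict]:
    # Rename the JSON fields to the same shape and tag each record with its origin.
    payload = json.loads(text)
    return [{"customer_id": c["id"], "email": c["email"], "source": "api"}
            for c in payload["customers"]]

records = extract_csv(CSV_SOURCE) + extract_json(JSON_SOURCE)
for rec in records:
    print(rec)
# {'customer_id': 1, 'email': 'a@example.com', 'source': 'csv'}
# {'customer_id': 2, 'email': 'b@example.com', 'source': 'api'}
```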

ETL systems often incorporate Change Data Capture (CDC) mechanisms to identify and extract only the data that has changed since the last extraction, which reduces processing time and resource requirements.
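One simple way to extract only changed data is a timestamp "high-water mark": each run selects rows whose `updated_at` is newer than the last value seen. This is a sketch of timestamp-based incremental extraction, a simpler technique than log-based CDC (which reads the database's transaction log). It assumes the source table has a reliably maintained `updated_at` column; the `customers` table is hypothetical. Note that this approach cannot see hard deletes, one reason log-based CDC is often preferred.

```python
import sqlite3

def extract_changes(conn, last_watermark: str) -> tuple[list[tuple], str]:
    """Return rows modified after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance to the newest change seen; keep the old watermark if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source table; ISO 8601 strings compare correctly as text.
src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)")
src.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "Alice", "2024-01-01T10:00:00"),
    (2, "Bob", "2024-01-02T09:00:00"),
])

changes, wm = extract_changes(src, "1970-01-01T00:00:00")  # first run: everything
print(len(changes), wm)  # 2 2024-01-02T09:00:00

src.execute("UPDATE customers SET name = 'Robert', updated_at = '2024-01-03T08:00:00' WHERE id = 2")
changes, wm = extract_changes(src, wm)  # next run: only the changed row
print(changes)  # [(2, 'Robert', '2024-01-03T08:00:00')]
```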

Transform

The transformation phase of ETL applies a series of operations to ensure data quality, consistency, and suitability for analysis. Key aspects of the transformation stage include:

Data Cleansing

- Missing values are handled either by removing incomplete records or by imputing replacements, such as a fixed default or a statistical estimate like the column mean or median.

- Duplicate records are identified and removed to improve data integrity and accuracy.

- Values are standardized to a consistent format, unit of measurement, and naming convention, making the data easier to analyze and compare.

- New columns or variables are derived from existing data through calculations, concatenations, or other operations, and metadata, categories, or classes are added to the tables for better organization and analysis.

- Data Transformation: Data types are converted to ensure compatibility with the target system, such as changing text to numeric or date formats. The data is then structured either to eliminate redundancy (normalization) or to combine data from multiple sources into a single table (denormalization).
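The transformation steps above can be sketched in plain Python. The sample records, field names, and the choice of the median for imputation are assumptions for illustration; in practice this work is often done with a dataframe library or in SQL.

```python
from datetime import date
from statistics import median

# Hypothetical raw records: a duplicate, a missing price, mixed casing and units, all values as text.
raw = [
    {"id": "1", "country": "us", "weight": "2.0 kg", "price": "10", "qty": "3", "signup": "2024-01-05"},
    {"id": "1", "country": "us", "weight": "2.0 kg", "price": "10", "qty": "3", "signup": "2024-01-05"},
    {"id": "2", "country": "DE", "weight": "500 g", "price": None, "qty": "1", "signup": "2024-02-10"},
    {"id": "3", "country": "Us", "weight": "1.5 kg", "price": "20", "qty": "2", "signup": "2024-03-15"},
]

def to_kg(value: str) -> float:
    """Standardize the unit of measurement: accepts values like '500 g' or '2.0 kg'."""
    number, unit = value.split()
    return float(number) / 1000 if unit == "g" else float(number)

def transform(records: list[dict]) -> list[dict]:
    # Deduplicate on the business key (id), keeping the first occurrence.
    seen, unique = set(), []
    for r in records:
        if r["id"] not in seen:
            seen.add(r["id"])
            unique.append(r)

    # Impute missing prices with the median of the known prices.
    fill = median(float(r["price"]) for r in unique if r["price"] is not None)

    out = []
    for r in unique:
        price = float(r["price"]) if r["price"] is not None else fill
        qty = int(r["qty"])
        out.append({
            "id": int(r["id"]),                          # type conversion: text -> int
            "country": r["country"].upper(),             # consistent format
            "weight_kg": to_kg(r["weight"]),             # consistent unit
            "price": price,
            "qty": qty,
            "signup": date.fromisoformat(r["signup"]),   # type conversion: text -> date
            "revenue": price * qty,                      # derived column
        })
    return out

for row in transform(raw):
    print(row["id"], row["country"], row["weight_kg"], row["revenue"])
# 1 US 2.0 30.0
# 2 DE 0.5 15.0
# 3 US 1.5 40.0
```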

Load

In the final step, transformed data is loaded into the target destination, often a data warehouse. Loading involves organizing the data in a way that supports efficient querying and analysis.
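As a sketch of loading for efficient querying, the function below writes rows into an indexed table inside a single transaction and uses an upsert so that re-running a load updates rows instead of duplicating them. SQLite stands in for the warehouse; the `fact_sales` table, its columns, and the index are hypothetical. The `ON CONFLICT ... DO UPDATE` syntax requires SQLite 3.24 or later.

```python
import sqlite3

def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Upsert transformed rows into a fact table in one transaction."""
    with conn:  # commits on success, rolls back on error (does not close the connection)
        conn.execute("""CREATE TABLE IF NOT EXISTS fact_sales (
            order_id   INTEGER PRIMARY KEY,
            region     TEXT NOT NULL,
            order_date TEXT NOT NULL,
            amount     REAL NOT NULL)""")
        # An index on commonly filtered columns supports efficient querying.
        conn.execute("CREATE INDEX IF NOT EXISTS idx_sales_region_date "
                     "ON fact_sales (region, order_date)")
        # Upsert: a re-delivered order updates the existing row instead of duplicating it.
        conn.executemany("""
            INSERT INTO fact_sales (order_id, region, order_date, amount) VALUES (?, ?, ?, ?)
            ON CONFLICT(order_id) DO UPDATE SET
                region = excluded.region,
                order_date = excluded.order_date,
                amount = excluded.amount""", rows)

conn = sqlite3.connect(":memory:")
load(conn, [(1, "EU", "2024-01-05", 100.0), (2, "US", "2024-01-06", 50.0)])
load(conn, [(2, "US", "2024-01-06", 55.0)])  # corrected re-delivery of order 2
print(conn.execute("SELECT order_id, amount FROM fact_sales ORDER BY order_id").fetchall())
# [(1, 100.0), (2, 55.0)]
```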

Key ETL Use Cases

ETL is particularly useful in the following scenarios:

- Data Integration for Business Intelligence: ETL is widely employed to integrate data from diverse sources into a central repository, providing a unified and structured dataset for business intelligence and downstream analytics tools.

- Data Migration and System Upgrades: ETL plays a crucial role in transferring data from old systems to new ones, helping ensure a smooth transition without data loss or disruption to business operations.

- Regulatory Compliance and Reporting: Organizations can use ETL to aggregate and transform data in ways that support compliance with industry regulations such as GDPR and HIPAA.

- Data Quality Improvement: By applying transformations and validation rules during the ETL process, organizations can identify and correct data anomalies, ensuring high-quality information for analysis and decision-making. This is particularly important in industries such as finance and healthcare, where data accuracy is also a regulatory requirement.

- Master Data Management (MDM): ETL supports Master Data Management initiatives by integrating and consolidating master data from various sources. This ensures that there is a single, accurate, and consistent version of key business entities, such as customers or products, across the organization.
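Validation rules like those described under data quality improvement can be expressed as named predicates applied to every record, with failing rows routed to a reject list instead of the target. The rules and record fields below are hypothetical examples, not a standard rule set.

```python
from typing import Callable

# Hypothetical validation rules: each rule name maps to a predicate over one record.
RULES: dict[str, Callable[[dict], bool]] = {
    "amount_non_negative": lambda r: r["amount"] >= 0,
    "email_present": lambda r: bool(r.get("email")),
    "currency_supported": lambda r: r["currency"] in {"EUR", "USD"},
}

def validate(records: list[dict]) -> tuple[list[dict], list[dict]]:
    """Split records into valid rows and rejected rows annotated with the rules they failed."""
    valid, rejected = [], []
    for r in records:
        failures = [name for name, check in RULES.items() if not check(r)]
        if failures:
            rejected.append({**r, "failed_rules": failures})
        else:
            valid.append(r)
    return valid, rejected

records = [
    {"id": 1, "email": "a@example.com", "amount": 12.0, "currency": "EUR"},
    {"id": 2, "email": "", "amount": -5.0, "currency": "EUR"},
    {"id": 3, "email": "c@example.com", "amount": 3.0, "currency": "GBP"},
]
valid, rejected = validate(records)
print([r["id"] for r in valid])  # [1]
for r in rejected:
    print(r["id"], r["failed_rules"])
# 2 ['amount_non_negative', 'email_present']
# 3 ['currency_supported']
```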

Differences between ETL and ELT

The key distinction between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) lies in the sequence of data processing.

In ETL, data is extracted from source systems, transformed to meet the desired structure, and finally loaded into the target destination. This approach is beneficial when transformations are compute-intensive and better run outside the target system, or when data from various sources must be consolidated before loading.

On the other hand, ELT reverses the last two steps: data is extracted and loaded into the target system first, and transformation occurs within the destination. ELT is advantageous when the target system, often a data lake or modern data warehouse, has enough processing power to run the transformations efficiently.

ELT is often associated with scalable data lake and lakehouse architectures, while ETL has traditionally been linked with data warehouses. The choice between ETL and ELT depends on factors such as data volume, processing requirements, and the architecture of the underlying systems.
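The difference in ordering can be illustrated by processing the same raw data both ways. In this sketch (hypothetical tables, with SQLite standing in for the target), the ETL path cleans the data in Python before loading, while the ELT path loads the raw rows first and transforms them with SQL inside the target.

```python
import sqlite3

# Hypothetical raw source rows: everything is text, amounts are in cents.
SOURCE = [("1", "eu", "1050"), ("2", "us", "700")]

def run_etl(conn: sqlite3.Connection) -> None:
    # ETL: transform in the pipeline (here, Python) before anything reaches the target.
    clean = [(int(i), region.upper(), int(cents) / 100) for i, region, cents in SOURCE]
    conn.execute("CREATE TABLE sales_etl (id INTEGER, region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?, ?)", clean)

def run_elt(conn: sqlite3.Connection) -> None:
    # ELT: load the raw data as-is, then transform inside the target with SQL.
    conn.execute("CREATE TABLE raw_sales (id TEXT, region TEXT, cents TEXT)")
    conn.executemany("INSERT INTO raw_sales VALUES (?, ?, ?)", SOURCE)
    conn.execute("""
        CREATE TABLE sales_elt AS
        SELECT CAST(id AS INTEGER)            AS id,
               UPPER(region)                  AS region,
               CAST(cents AS INTEGER) / 100.0 AS amount
        FROM raw_sales""")

conn = sqlite3.connect(":memory:")
run_etl(conn)
run_elt(conn)
q = "SELECT id, region, amount FROM {} ORDER BY id"
print(conn.execute(q.format("sales_etl")).fetchall())  # [(1, 'EU', 10.5), (2, 'US', 7.0)]
print(conn.execute(q.format("sales_elt")).fetchall())  # [(1, 'EU', 10.5), (2, 'US', 7.0)]
```

Both paths end with the same table contents; what differs is where the transformation runs and whether the raw data is also kept in the target (`raw_sales` in the ELT path).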

FAQs

What are the challenges of ETL?

Common ETL challenges include:

- Data complexity: Dealing with diverse data formats and structures can be complex.

- Data quality issues: Ensuring data accuracy and completeness requires ongoing effort.

- Performance optimization: ETL processes can be resource-intensive, especially for large datasets.
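For large datasets, one common optimization is to stream the source and load it in fixed-size batches, so memory use stays bounded and each batch is committed as one transaction rather than row by row. The `batched` helper, the generated source, and the `measurements` table are assumptions for this sketch (Python 3.12 also provides `itertools.batched`, which yields tuples instead of lists).

```python
import itertools
import sqlite3
from typing import Iterable, Iterator

def batched(rows: Iterable, size: int) -> Iterator[list]:
    """Yield lists of at most `size` items so the full dataset never sits in memory."""
    it = iter(rows)
    while batch := list(itertools.islice(it, size)):
        yield batch

def source_rows(n: int) -> Iterator[tuple]:
    # Hypothetical stand-in for a large source read lazily (e.g. a DB cursor or a file).
    for i in range(n):
        yield (i, i * 2.5)

def load_in_batches(conn: sqlite3.Connection, rows: Iterable[tuple], batch_size: int = 1_000) -> int:
    conn.execute("CREATE TABLE IF NOT EXISTS measurements (id INTEGER PRIMARY KEY, value REAL)")
    loaded = 0
    for batch in batched(rows, batch_size):
        with conn:  # one transaction per batch instead of one per row
            conn.executemany("INSERT INTO measurements VALUES (?, ?)", batch)
        loaded += len(batch)
    return loaded

conn = sqlite3.connect(":memory:")
print(load_in_batches(conn, source_rows(10_500)))  # 10500
```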

How can I ensure the success of my ETL project?

- Clearly define your data requirements and target audience.

- Choose the right ETL tool for your needs.

- Implement data quality checks and procedures.

- Regularly monitor and test your ETL processes.
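A simple monitoring check is reconciliation: after each run, compare row counts and a control total between source and target, and log the result. This sketch assumes both sides expose an `orders` table with an `amount` column; the table names, helper functions, and tolerance are hypothetical.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl")

def totals(conn: sqlite3.Connection, table: str) -> tuple:
    # Table names cannot be bound as parameters; assume they come from trusted pipeline config.
    return conn.execute(f"SELECT COUNT(*), COALESCE(SUM(amount), 0) FROM {table}").fetchone()

def reconcile(source: sqlite3.Connection, target: sqlite3.Connection, table: str) -> bool:
    """Compare row count and amount total between source and target after a run."""
    src, tgt = totals(source, table), totals(target, table)
    ok = src[0] == tgt[0] and abs(src[1] - tgt[1]) < 1e-6
    if ok:
        log.info("reconciliation passed for %s: %d rows", table, tgt[0])
    else:
        log.error("reconciliation FAILED for %s: source=%s target=%s", table, src, tgt)
    return ok

source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
source.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0), (2, 5.5)])
target.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10.0)])  # simulate a dropped row

print(reconcile(source, target, "orders"))  # False (an ERROR line is also logged)
```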