Tokenization

What is Tokenization?

Tokenization is a data masking technique that substitutes sensitive data with randomly generated tokens. The tokens carry no meaningful information on their own. They can only be mapped back to the original data through a secure vault, a repository that links each token to the value it replaced.

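A token can be exchanged for the original sensitive value by looking it up in the vault, but it cannot be decrypted, because it has no mathematical relationship to that value. Tokenization is generally more secure than conventional masking, but it is also more complex to implement, since the vault itself must be operated and protected. As an enterprise security solution, it enhances data privacy by keeping sensitive details shielded from unauthorized access and minimizing the impact of data breaches.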
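The difference between looking up a token and decrypting it can be made concrete with a minimal Python sketch. The `vault` dictionary and the `tokenize`/`detokenize` functions are hypothetical names chosen for illustration, and a plain in-memory dictionary stands in for what would be a hardened, access-controlled store in practice.

```python
import secrets

# Hypothetical in-memory vault: maps each token back to the value it replaced.
vault: dict[str, str] = {}


def tokenize(value: str) -> str:
    # The token is 16 random bytes from a CSPRNG; nothing in it is derived from
    # `value`, so no key or algorithm can turn it back into the original.
    # (A collision check is omitted here for brevity.)
    token = secrets.token_urlsafe(16)
    vault[token] = value
    return token


def detokenize(token: str) -> str:
    # Recovering the original is a vault lookup, not a decryption.
    return vault[token]


ssn_token = tokenize("123-45-6789")
print(ssn_token)              # a random URL-safe string, different on every run
print(detokenize(ssn_token))  # 123-45-6789
```

Deleting the `vault` entry leaves `ssn_token` with no way back to the original value, which is exactly why the vault must be protected.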

How Does Tokenization Work?

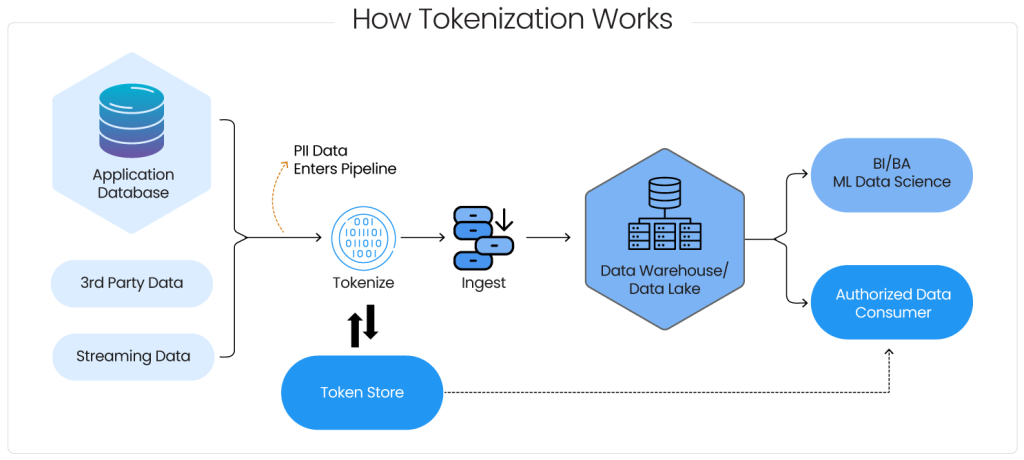

Tokenization is a sophisticated data security method that goes beyond the surface of merely replacing sensitive information with random tokens. Understanding how the masking technique works is crucial for appreciating its effectiveness in protecting data.

Pictorial representation of how Tokenization works

- Data Input: The process begins by intercepting sensitive data at a secure checkpoint before it enters storage or processing systems. This creates a controlled environment in which the data can be tokenized.

- Token Generation: Once intercepted, the sensitive data is tokenized. The tokenization system generates unique tokens that are unrelated to the original data and follow no discernible pattern. It typically relies on cryptographic techniques, such as a cryptographically secure random number generator, to ensure token randomness and uniqueness.

- Token Repository: The generated tokens are stored in a secure repository called the tokenization vault, which maps each token to its original data. Access is restricted to authorized personnel, safeguarding the link between tokens and sensitive data.

- Secure Transmission and Storage: The tokenized data, being non-sensitive, can be transmitted and stored with reduced security concerns. Even in a security breach, the compromised tokens are worthless without the corresponding mapping stored securely in the vault.

- Token Retrieval: When required, authorized users can retrieve the original data from the vault. This process, known as de-tokenization, looks up the token in the vault and returns the original sensitive value.
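The five steps above can be sketched as a small Python class. `TokenVault` and its methods are hypothetical, the vault is an in-memory dictionary rather than a hardened store, and reusing one token per value is an assumption made for this sketch rather than a requirement of tokenization.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault illustrating the tokenization steps.

    A production vault would be a persistent, hardened, access-controlled store.
    """

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Data input: reject empty input at the checkpoint before anything is stored.
        if not value:
            raise ValueError("nothing to tokenize")
        # Assumption: the same value always maps to the same token.
        # Some systems issue a fresh token on every request instead.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Token generation: random hex from a CSPRNG, retried on the rare collision.
        token = secrets.token_hex(16)
        while token in self._token_to_value:
            token = secrets.token_hex(16)
        # Token repository: record the mapping in the vault.
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Token retrieval: de-tokenization is a lookup, not a decryption.
        try:
            return self._token_to_value[token]
        except KeyError:
            raise KeyError("unknown token") from None


vault = TokenVault()
tok = vault.tokenize("4111 1111 1111 1111")
# Secure transmission and storage: downstream records hold only the token.
order = {"order_id": "A-1001", "card": tok}
print(vault.detokenize(order["card"]))  # 4111 1111 1111 1111
```

The `order` record (with its hypothetical `order_id`) can be logged, transmitted, or stored without exposing the card number; only code with access to `vault` can recover it.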

Benefits of Tokenization

Tokenization offers a range of advantages that make it a cornerstone of safeguarding sensitive information. Understanding these benefits helps organizations strengthen their data security and privacy measures.

- Enhanced Security: It significantly enhances data security by replacing sensitive information with tokens. Even if intercepted, these tokens are meaningless without access to the corresponding vault, providing a robust defense against unauthorized access.

- Compliance Assurance: It helps organizations achieve and maintain compliance with stringent data protection regulations and standards such as GDPR, PCI DSS, HIPAA, LGPD, and PIPL by ensuring that sensitive data is handled in line with legal and regulatory requirements.

- Secure Transmission and Storage: Tokenized data, being non-sensitive and lacking identifying information, can be transmitted and stored with reduced security concerns. This strengthens enterprise security, mitigating data transmission and storage risks.

- Customizable Access Control: It enables organizations to implement customizable access controls. This ensures that only authorized personnel with explicit permissions can access sensitive information, protecting personally identifiable information (PII) from unauthorized disclosure.
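Access control in tokenization is enforced at de-tokenization time: anyone may hold a token, but only explicitly permitted callers can exchange it for the original value. Below is a minimal Python sketch assuming a simple role-based check. `AccessControlledVault` and the role names are hypothetical, and a real deployment would authenticate callers rather than trust a role string passed in by the caller.

```python
import secrets


class AccessControlledVault:
    """Hypothetical vault that only de-tokenizes for callers holding an allowed role."""

    def __init__(self, allowed_roles: set[str]) -> None:
        self._store: dict[str, str] = {}
        self._allowed_roles = allowed_roles

    def tokenize(self, value: str) -> str:
        # Collision check omitted for brevity; 16 random bytes make one very unlikely.
        token = secrets.token_hex(16)
        self._store[token] = value
        return token

    def detokenize(self, token: str, role: str) -> str:
        # Only explicitly permitted roles may see the original value.
        if role not in self._allowed_roles:
            raise PermissionError(f"role {role!r} may not de-tokenize")
        return self._store[token]


vault = AccessControlledVault(allowed_roles={"billing"})
tok = vault.tokenize("jane.doe@example.com")
print(vault.detokenize(tok, role="billing"))  # jane.doe@example.com
try:
    vault.detokenize(tok, role="analyst")
except PermissionError as exc:
    print(exc)  # role 'analyst' may not de-tokenize
```

Keeping the permission check inside the vault, rather than in each calling application, means the policy is enforced in one place.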

Use Cases

Tokenization finds versatile applications across industries and scenarios. The following use cases highlight its effectiveness in safeguarding sensitive data.

- Development and Testing: Tokenization preserves the realism of datasets in non-production environments without compromising privacy, allowing developers and testers to work with representative data instead of actual sensitive information.

- Research and Analytics: It facilitates ethical and privacy-conscious research practices, ensuring the extraction of valuable insights without exposing raw, identifiable information.

- Healthcare Data Protection: Tokenization is widely used in healthcare to pseudonymize patient records and medical information, upholding privacy standards while allowing authorized healthcare professionals to access relevant data for treatment and research.

- Financial Transactions: In the financial industry, it masks personal financial information, such as credit card numbers or account details, to reduce the risk of identity theft and financial fraud while maintaining the functionality of transactional systems.
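For payment card data, one common convention is a format-preserving token that keeps the card number's length and last four digits, so that transactional systems and receipts that expect that shape keep working. The Python sketch below assumes that convention. `tokenize_card` is a hypothetical helper, the 13 to 19 digit length check is an assumption, and real products may apply additional rules (for example, producing tokens that cannot be mistaken for valid card numbers).

```python
import re
import secrets


def tokenize_card(pan: str, vault: dict[str, str]) -> str:
    """Hypothetical helper: swap all but the last four digits for random digits."""
    digits = pan.replace(" ", "")
    # Assumption: treat 13 to 19 ASCII digits as a card number.
    if not re.fullmatch(r"[0-9]{13,19}", digits):
        raise ValueError("not a card number")
    while True:
        random_part = "".join(secrets.choice("0123456789") for _ in range(len(digits) - 4))
        token = random_part + digits[-4:]
        # Retry if the token is already in use or happens to equal the real number.
        if token not in vault and token != digits:
            vault[token] = digits
            return token


vault: dict[str, str] = {}
tok = tokenize_card("4111 1111 1111 1111", vault)
print(len(tok), tok[-4:])  # 16 1111
print(vault[tok])          # 4111111111111111
```

Because the token has the same shape as a real card number, downstream systems need no schema changes, while the full number lives only in the vault.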

In conclusion, tokenization is a pivotal data masking technique that offers a robust layer of security by replacing sensitive data with randomized tokens. Core features such as the tokenization vault, token uniqueness, and scalability help protect sensitive information across applications and platforms. Embracing tokenization signifies a proactive approach to safeguarding data integrity and privacy in today’s ever-evolving digital landscape.

FAQs

Is tokenization reversible, or can the original data be retrieved from tokens?

Tokenization is reversible, but only through the vault. Authorized systems can de-tokenize a token to retrieve the original value, but the original data cannot be reconstructed from the tokens alone. This minimizes the risk of data exposure even if an attacker intercepts the tokens.

Can organizations use tokenization for all types of sensitive data, including personally identifiable information (PII) and payment card data?

Yes. Tokenization is a versatile method that can be applied to many types of sensitive data, including PII and payment card data, providing a robust security measure against unauthorized access and data breaches.

Can organizations seamlessly integrate tokenization into their existing systems and workflows?

Generally, yes. Many tokenization solutions are designed to integrate with existing systems and workflows, and they often provide APIs and software development kits (SDKs) to simplify implementation across platforms and applications.